This content is not available in your language... So if you don't understand the language... well, at least you can appreciate the pictures of the post, right?


A few days ago, a friend of mine showed me a video that YouTube had recommended, called "Não Use Docker Compose em Produção! Eis o Porquê" ("Don't Use Docker Compose in Production! Here's Why") by Erick Wendel. I have been using Docker Compose in production for more than FOUR YEARS, hosting applications with more than two million monthly visits, and I found that the video focuses on nonsense with a dash of fearmongering, as if its only goal were to sell Kubernetes courses.

The video recommends using Kubernetes and, well, I have used k3s, and the advantages you get from running k3s on your own machines are small compared to the headache of maintaining it. I have spent hours debugging problems in my applications that, in the end, turned out to be k3s problems. Examples include differences between k3s's kubectl and upstream Kubernetes, and the fact that if you bind a service to port 10010, you can no longer attach to services hosted on k3s, and that port isn't documented anywhere.

Using Kubernetes managed by AWS/GCP/Azure takes those headaches away, but are you really going to recommend AWS/GCP/Azure to someone who is just starting out as an entrepreneur? They will spend far more money on cloud providers than they would on a VPS/dedicated server from a host like OVHcloud or Hetzner! For beginners, it is better to use Compose and discover what their application actually needs over time, instead of jumping straight into Kubernetes and ending up with a pile of things that, for them, are unnecessary and may even get in the way of their application.


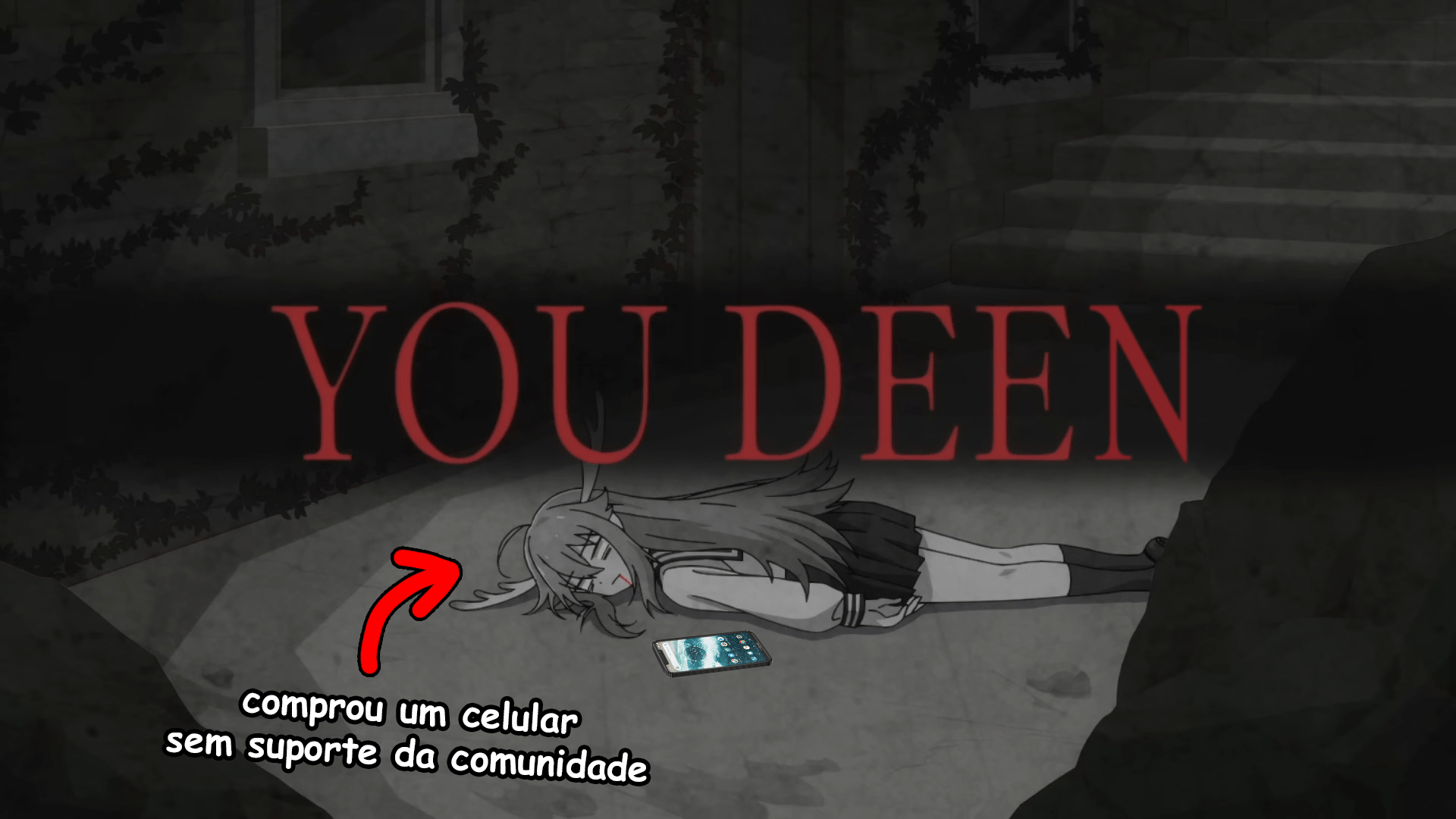

You may have noticed that this guide was posted in the far-off year of 2024, even though the Motorola One (also known as "motorola one", "moto one", deen or XT1941) was released at the end of 2018... better late than never!

I decided to do this because I recently used my Moto G Turbo (merlin) to dump my Authy TOTP secrets, since Authy decided to stop supporting its desktop app, and that made me want to unlock the bootloader and get up to some mischief with my Motorola One.

For context: I have had my Motorola One since the end of 2019. I bought it because my internship required us to have a phone for work and, while my Moto G Turbo still met my needs, its battery was already on its last legs.

Since it was a work phone, I had to install all those shenanigans that companies love, like Microsoft's so-called "Company Portal", and since the phone was "managed" by the company, I didn't even dare to root it.

I also had a serious slowness problem with this phone: it was slow to the point of being unusable, as in "I tap to open the keyboard and it takes about 30 seconds to actually open", which was strange because, while my Moto G Turbo was slow, it was never slow to the point of becoming unresponsive. So I was always curious whether formatting the phone would make it "good" again. I'm not going to use it as my daily driver, but if it stopped lagging so much, I could at least use it for other things.

So I decided to look into it... and ran into a deplorable situation: there is practically nothing about modding it on the internet! Back then, I thought my Moto G Turbo was in a tough spot because it was just a revision of the Moto G³ (2015) (osprey) that was "unloved" for having been released in only a few regions, so its modding community was much smaller.

But at least the Moto G Turbo has an official TWRP and once had official LineageOS builds! Unfortunately, the Motorola One didn't get the same luxury...

<Pantufa> But I just looked it up and there are official TWRP builds for the Motorola One!


Don't be fooled! Other phones also carry the "Motorola One" brand (Motorola One Action (troika), Motorola One Vision (kane), Motorola One Zoom (parker)), and those phones have more modding guides and resources.

The Motorola One we are talking about is the Motorola One, codename deen, model XT1941, and it seems to be a poor little thing forgotten by the community, since there are very few guides on how to modify it.

And that's why I decided to write my own guide.

* I'm not responsible for bricked devices, dead SD cards, thermonuclear war, or you getting fired because the alarm app failed (like it did for me...).

* YOU are choosing to make these modifications, and if you point the finger at me for messing up your device, I will laugh at you.

* Your warranty will be void if you tamper with any part of your device / software.

In this guide, I'll show what I usually expect when I think about "modding a phone", that is:

I wrote this guide assuming this isn't your first rodeo with phone modding, but I also tried to make it clear enough that you won't be left with doubts, and whatever doubts remain should be things you can figure out on your own. But if you have any questions, just ask!

By default, LXD virtual machines do not preallocate their memory up to the configured limits.memory. Instead, they allocate memory on demand, up to the most the guest has needed during its lifetime, but they do not give memory back to the host after the guest frees it, ballooning devices notwithstanding.

So, since the memory isn't given back to the host anyway, why not preallocate all of it? This makes it much easier to reason about whether you have enough memory in your system, instead of thinking "uuhh, I do have 10240 megabytes free, but one of my VMs has a 6144 megabyte limit and is currently using 2048 megabytes, so I actually have 6144 megabytes free".
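The mental arithmetic above can be written down as a tiny shell function. This is a sketch that assumes you already know each VM's limit and current usage in megabytes; the function name effective_free_mb is made up for illustration.

```sh
# Effective free host memory (in MB) once every VM has grown to its limits.memory.
# Usage: effective_free_mb <host_free_mb> <vm_limit_mb> <vm_used_mb> [<vm_limit_mb> <vm_used_mb> ...]
effective_free_mb() {
    avail=$1
    shift
    while [ "$#" -ge 2 ]; do
        # Each VM can still claim the (limit - used) MB it has not touched yet
        avail=$((avail - ($1 - $2)))
        shift 2
    done
    echo "$avail"
}

effective_free_mb 10240 6144 2048   # prints 6144
```

With -mem-prealloc on every VM, "used" is always equal to "limit" from the host's point of view, so the subtraction disappears and the host's free memory is the real number.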

First, you need to shut down your virtual machine...

```sh
lxc stop test-memoryalloc
```

Now, if you haven't already, let's set the memory limit for the virtual machine...

```sh
lxc config set test-memoryalloc limits.memory 8GB
```

And then we add QEMU's -mem-prealloc parameter to the VM!

```sh
lxc config set test-memoryalloc raw.qemu "\-mem-prealloc"
```

That's it! Start the VM again with lxc start test-memoryalloc, and if you check how much memory the QEMU process for the test-memoryalloc virtual machine is using, you'll see that it is using ~8GB.
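One way to do that check from the shell is sketched below. It assumes that lxc info prints a "PID: <pid>" line for a running instance and that, for a VM, this PID is the QEMU process; the helper name instance_rss is hypothetical. It reads the VmRSS line from /proc, which reports resident memory in kB.

```sh
# Print the resident memory (VmRSS) of a running LXD instance's main process.
# Assumption: "lxc info <instance>" contains a "PID: <pid>" line; for a VM this
# is expected to be the QEMU process.
instance_rss() {
    pid=$(lxc info "$1" | awk '/^PID:/ { print $2 }')
    if [ -z "$pid" ]; then
        echo "no PID found for $1 (is it running?)" >&2
        return 1
    fi
    grep '^VmRSS:' "/proc/$pid/status"
}
```

For example, instance_rss test-memoryalloc right after starting the VM should already report roughly the full 8GB, whereas without -mem-prealloc the number starts small and only grows as the guest touches memory.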

And yes, you need the \ before the dash: without it, lxc parses -mem-prealloc as a command-line flag and fails with Error: unknown shorthand flag: 'm' in -mem-prealloc.

If you want to remove the -mem-prealloc parameter, you can remove it by using lxc config unset.

```sh
lxc config unset test-memoryalloc raw.qemu
```

Lately I've noticed that my nginx server is throwing "upstream prematurely closed connection while reading upstream" when reverse proxying my Ktor webapps, and I'm not sure why.

The client (Ktor) fails with "Chunked stream has ended unexpectedly: no chunk size" when nginx throws that error.

```
Exception in thread "main" java.io.EOFException: Chunked stream has ended unexpectedly: no chunk size
	at io.ktor.http.cio.ChunkedTransferEncodingKt.decodeChunked(ChunkedTransferEncoding.kt:77)
	at io.ktor.http.cio.ChunkedTransferEncodingKt$decodeChunked$3.invokeSuspend(ChunkedTransferEncoding.kt)
	at kotlin.coroutines.jvm.internal.BaseContinuationImpl.resumeWith(ContinuationImpl.kt:33)
	at kotlinx.coroutines.DispatchedTask.run(DispatchedTask.kt:106)
	at kotlinx.coroutines.internal.LimitedDispatcher.run(LimitedDispatcher.kt:42)
	at kotlinx.coroutines.scheduling.TaskImpl.run(Tasks.kt:95)
	at kotlinx.coroutines.scheduling.CoroutineScheduler.runSafely(CoroutineScheduler.kt:570)
	at kotlinx.coroutines.scheduling.CoroutineScheduler$Worker.executeTask(CoroutineScheduler.kt:750)
	at kotlinx.coroutines.scheduling.CoroutineScheduler$Worker.runWorker(CoroutineScheduler.kt:677)
	at kotlinx.coroutines.scheduling.CoroutineScheduler$Worker.run(CoroutineScheduler.kt:664)
```

The error happens randomly, and it only seems to affect big (1MB+) requests... And here's the rabbit hole that I went down to track the bug and figure out a solution.

If you have a dedicated server with OVHcloud, you can purchase additional IPs, also known as "Failover IPs", for your server. Because I have enough resources on my dedicated servers, I wanted to give/rent VPSes to my friends for their own projects, but I wanted to give them the real VPS experience, with a real external public IP that they can connect to and use.

So I figured out how to bind an external IP to an LXD container/LXD VM! Although there are several online tutorials discussing this process, none of them worked for me until I stumbled upon this semi-unrelated OVHcloud guide that pointed me in the right direction.

Even though I stopped using Proxmox on my dedicated servers, there were still some stateful containers that I needed to host that couldn't be hosted via Docker, such as "VPSes" that I give out to my friends for them to host their own stuff.

Enter LXD: a virtual machine and system container manager developed by Canonical. LXD is included in Ubuntu Server 20.04 and newer, and can be easily set up with the lxd init command. Just like Proxmox, LXD can manage LXC containers. Despite their similar names, LXD is not a "successor" to LXC; rather, it is a management tool for LXC containers (and yes, they do know that this is very confusing).

Keep in mind that LXD does not provide a GUI like Proxmox. If you prefer managing your containers through a GUI, you may find LXD less appealing. But for me? I rarely used Proxmox's GUI anyway and always managed my containers via the terminal.

Peter Shaw has already written an excellent tutorial on this topic, and it rocks! But I wanted to write my own tutorial with my own findings and discoveries, such as how to fix network issues after migrating the container, which his tutorial left out because "it is a little beyond the scope of this article, that’s a topic for another post."

The source server is running Proxmox 7.1-12, the target server is running Ubuntu Server 22.04. The LXC container we plan to migrate is running Ubuntu 22.04.

Since I stopped using Proxmox on my dedicated servers, I found myself missing my VXLAN network, which allowed me to assign static IPs to my LXC containers/VMs. If I had a database hosted on one of my dedicated servers, an application on another dedicated server could access it without exposing the service to the whole world.

Initially, I tried using Tailscale on the host system and binding the service's ports to the host's Tailscale IP, but this method proved to be complicated and difficult to manage. I had to keep track of which ports were being used and for what service.

However, I discovered a better solution: running Tailscale in its own Docker container and making my application's container use the Tailscale container's network! This pattern is known as a "sidecar container".
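A minimal sketch of the sidecar idea using plain docker run, assuming the official tailscale/tailscale image and its TS_AUTHKEY, TS_HOSTNAME, TS_STATE_DIR and TS_USERSPACE environment variables. The function name, the "-tailscale" container suffix and the volume name are hypothetical. The important part is --network container:<name>, which puts the app container inside the Tailscale container's network namespace.

```sh
# Hypothetical helper: start a Tailscale sidecar plus an app container sharing its network.
# Usage: start_with_tailscale <app-name> <app-image>   (expects TS_AUTHKEY to be set)
start_with_tailscale() {
    app_name=$1
    app_image=$2
    sidecar="$app_name-tailscale"
    # Kernel-mode Tailscale (TS_USERSPACE=false) needs the TUN device and NET_ADMIN
    docker run -d --name "$sidecar" \
        --cap-add NET_ADMIN --device /dev/net/tun \
        -e TS_AUTHKEY="$TS_AUTHKEY" \
        -e TS_HOSTNAME="$app_name" \
        -e TS_STATE_DIR=/var/lib/tailscale \
        -e TS_USERSPACE=false \
        -v "$sidecar-state:/var/lib/tailscale" \
        tailscale/tailscale || return 1
    # The app joins the sidecar's network namespace: it is reachable on the
    # sidecar's Tailscale IP and publishes no ports on the host.
    docker run -d --name "$app_name" --network "container:$sidecar" "$app_image"
}
```

For example, with TS_AUTHKEY exported, start_with_tailscale my-app my-app-image makes my-app reachable from the rest of the tailnet under the node name my-app, with no -p port mappings, so there are no host ports to keep track of.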

If you manage your Docker containers via systemd services, you may get annoyed that your container logs are also written to syslog, which can eat up all of your disk space.

Here's how to disable syslog forwarding for a specific systemd service:

```sh
cd /etc/systemd
cp journald.conf journald@noisy.conf
nano journald@noisy.conf
```

In journald@noisy.conf, set ForwardToSyslog to off (under the [Journal] section, uncommenting the line if needed):

```ini
ForwardToSyslog=off
```
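If you'd rather script the copy-and-edit step, here is a sketch. The function name make_journald_namespace and its optional directory argument are hypothetical, and it assumes GNU sed (for -i). It copies journald.conf to journald@<namespace>.conf and forces ForwardToSyslog=off, uncommenting the existing line if there is one.

```sh
# Hypothetical helper: create journald@<ns>.conf with syslog forwarding disabled.
# Usage: make_journald_namespace <namespace> [config_dir]   (default: /etc/systemd)
make_journald_namespace() {
    ns=$1
    dir=${2:-/etc/systemd}
    conf="$dir/journald@$ns.conf"
    cp "$dir/journald.conf" "$conf" || return 1
    if grep -q '^#\{0,1\}ForwardToSyslog=' "$conf"; then
        # Replace the (possibly commented-out) ForwardToSyslog line
        sed -i 's/^#\{0,1\}ForwardToSyslog=.*/ForwardToSyslog=off/' "$conf"
    else
        # No such line: append it (journald.conf only has a [Journal] section)
        echo 'ForwardToSyslog=off' >> "$conf"
    fi
}
```

Run it as root, e.g. make_journald_namespace noisy, before pointing your service at the namespace.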

Then, in your service's configuration file (/etc/systemd/system/service-here.service)...

```ini
[Service]
...
LogNamespace=noisy
```

And that's it! After running systemctl daemon-reload and restarting the service, if you want to look at your application logs, you need to use journalctl --namespace noisy -xe -u YOUR_SERVICE_HERE. If you don't include --namespace, it will only show the service's startup/shutdown messages.

```sh
journalctl --namespace noisy -xe -u powercms
```

This tutorial is based on hraban's answer; however, their answer explains how to disable logging to journald entirely and, because I didn't find any other tutorial about disabling syslog forwarding for specific services, I decided to write one myself.